As more solar energy systems are installed across the U.S., scientists are quantifying the effects on wildlife. Current data collection methods are time-consuming, but the U.S. Department of Energy’s (DOE) Argonne National Laboratory has been awarded $1.3 million from DOE’s Solar Energy Technologies Office to develop technology that can cost-effectively monitor avian interactions with solar infrastructure.

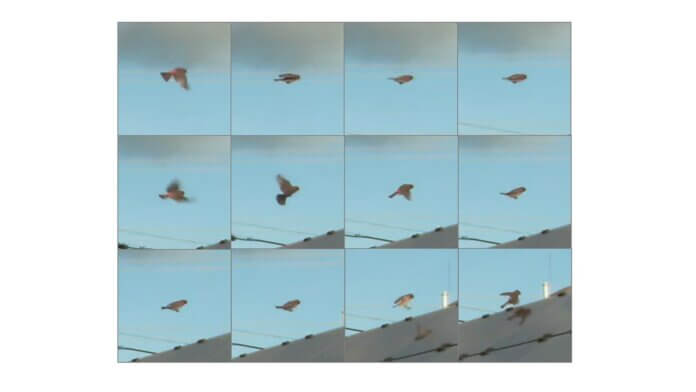

The three-year project, which began this spring, will combine computer vision techniques with a form of artificial intelligence (AI) to monitor solar sites for birds and collect data on what happens when they fly by, perch on or collide with solar panels.

The automated avian monitoring technology is being developed in collaboration with Boulder AI, a company with extensive experience producing AI-driven cameras and the algorithms that run on them. Several solar energy facilities also support the project by providing permission to collect video and evaluate the technology onsite.

“There is speculation about how solar energy infrastructure affects bird populations, but we need more data to scientifically understand what is happening,” says Yuki Hamada, a remote-sensing scientist at Argonne, who is leading the project.

An Argonne study published in 2016 estimated, based on the limited data available, that collisions with photovoltaic panels at U.S. utility-scale solar facilities kill between 37,800 and 138,600 birds per year. While that’s a low number compared with building and vehicle strikes, which kill hundreds of millions of birds annually, learning more about how and when those deaths occur could help prevent them.

The new project aims to reduce the need for frequent human surveillance by using cameras and computer models that can collect more and better data at a lower cost. Achieving that involves three tasks: detecting moving objects near solar panels; identifying which of those objects are birds; and classifying events (such as perching, flying through or colliding). To carry out these tasks, scientists will build models using deep learning, an AI method inspired by the neural networks of the human brain, making it possible to “teach” computers how to spot birds and behaviors by training them on similar examples.
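The three tasks can be pictured as stages in a pipeline. The sketch below is purely illustrative and is not the project's method: it assumes grayscale frames stored as nested lists, uses simple background differencing for the detection stage, and substitutes hand-written rules for the deep learning models that would handle the bird-identification and event-classification stages. All function names, thresholds and event labels are hypothetical, and collisions are left out because they would need a richer model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image: rows of 0-255 pixel values


@dataclass
class Detection:
    box: Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive
    changed_pixels: int


def detect_motion(background: Frame, frame: Frame,
                  threshold: int = 30) -> Optional[Detection]:
    """Stage 1: find pixels that differ from the background by more than
    `threshold` and return their bounding box (None if nothing changed)."""
    rows, cols = [], []
    for r, (bg_row, fr_row) in enumerate(zip(background, frame)):
        for c, (bg, px) in enumerate(zip(bg_row, fr_row)):
            if abs(px - bg) > threshold:
                rows.append(r)
                cols.append(c)
    if not rows:
        return None
    return Detection((min(rows), min(cols), max(rows), max(cols)), len(rows))


def is_bird(det: Detection, min_pixels: int = 2, max_pixels: int = 50) -> bool:
    """Stage 2 placeholder: a size filter standing in for a trained
    classifier (assumed limits; real insects or people need a real model)."""
    return min_pixels <= det.changed_pixels <= max_pixels


def box_center(det: Detection) -> Tuple[float, float]:
    """Center of a detection's bounding box as (row, column)."""
    top, left, bottom, right = det.box
    return ((top + bottom) / 2, (left + right) / 2)


def classify_event(centers: List[Tuple[float, float]],
                   still_tolerance: float = 1.0) -> str:
    """Stage 3 placeholder: call the event 'perching' if the object barely
    moved between the last two observations, otherwise 'fly-through'."""
    if len(centers) < 2:
        return "unknown"
    (r0, c0), (r1, c1) = centers[-2], centers[-1]
    moved = abs(r1 - r0) + abs(c1 - c0)
    return "perching" if moved <= still_tolerance else "fly-through"
```

In a trained system, the second and third stages would be replaced by models learned from labeled video rather than fixed rules; the sketch only shows how the output of each stage feeds the next.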

In an earlier Argonne project, researchers trained computers to identify drones flying overhead. The avian-solar interaction project will build on this capability while bringing in new complexities, notes Adam Szymanski, an Argonne software engineer who developed the drone-detection model. The cameras at solar facilities will be angled toward panels rather than pointed upward, so the backgrounds will be more complex. For example, the system will need to tell the difference between birds and other moving objects in the field of view, such as clouds, insects or people.

Initially, the researchers will set up cameras at one or two solar energy sites, recording and analyzing video. Hours of video will need to be processed and classified by hand in order to train the computer model.

The Argonne project was selected as a part of the Solar Energy Technologies Office Fiscal Year 2019 funding program, which includes funding to develop data collection methods to assess the impacts of solar infrastructure on birds. A better understanding of avian-solar interactions can potentially reduce the siting, permitting and wildlife mitigation costs for solar energy facilities.

Photo: Bird movement captured in video